Hello again with a slightly delayed November Update for openage.

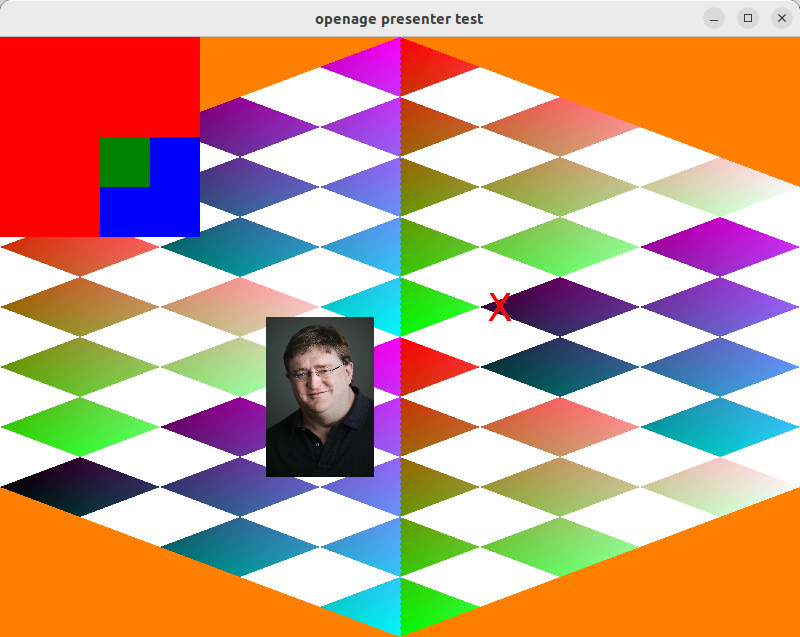

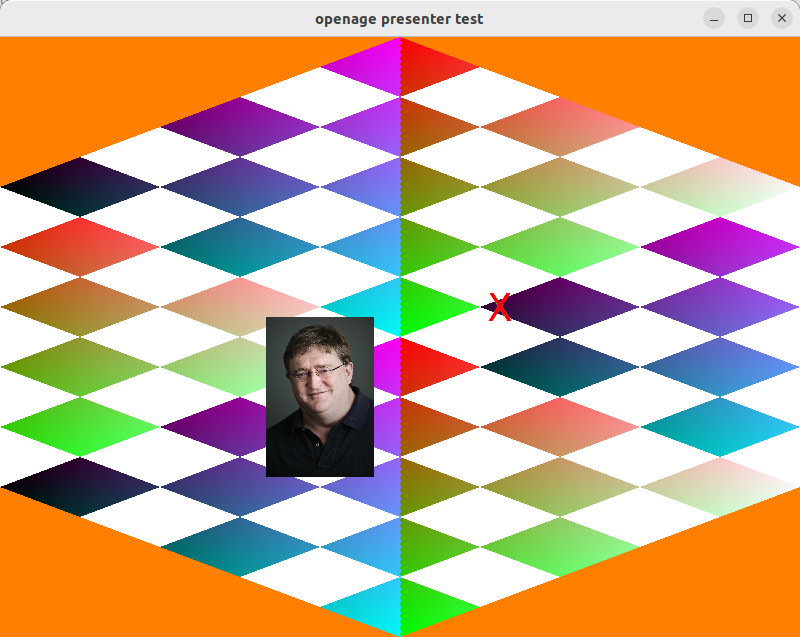

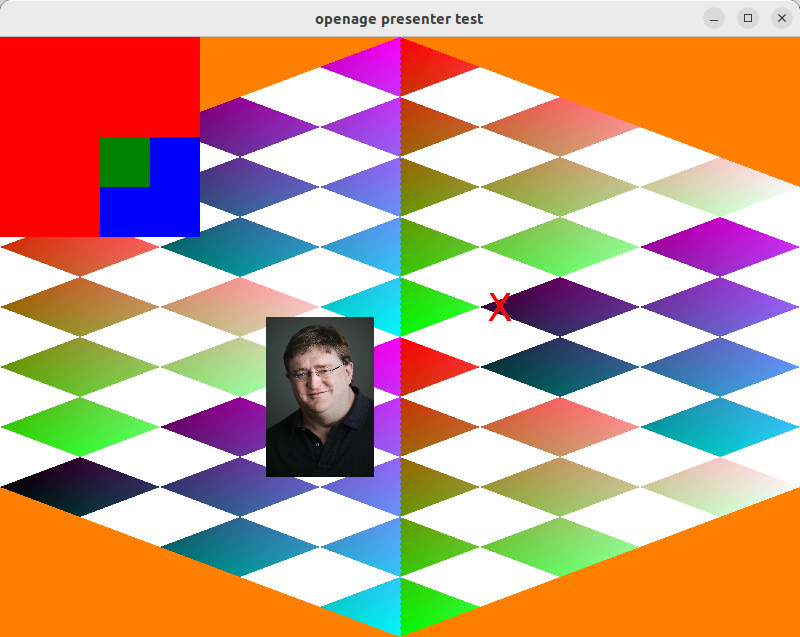

Last month's post had an unfortunate lack of images, so I hope we can make up for it this time. With this in mind, we can present you with a wonky, weirdly colored screenshot taken straight from the current build.

It probably doesn't look like much, but it already shows rendering components being driven by the engine's internal gamestate (although still making heavy use of test textures and parameters). The current build also implements the major render stages for drawing the game: terrain and unit rendering.

Gamestate to Renderer

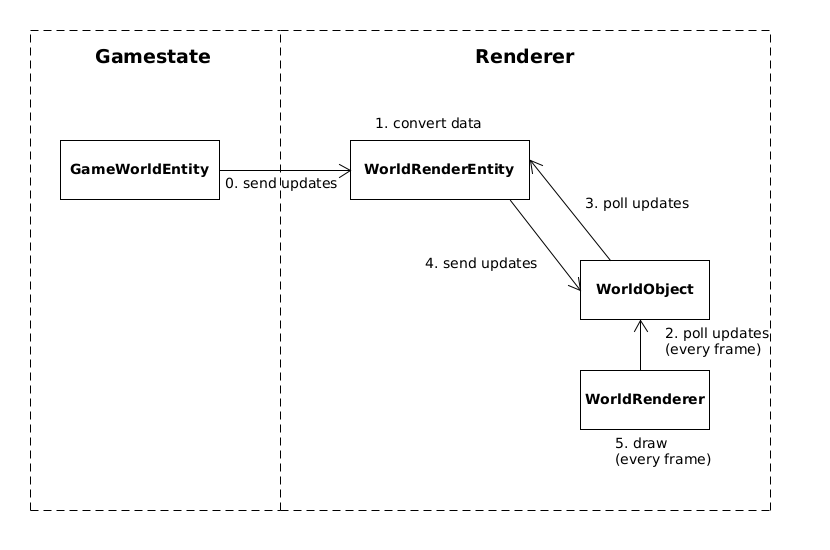

But let's backtrack a little bit and start from where we left off last month. In our last update, we talked about decoupling renderer and gamestate as much as possible, so that they don't depend on each other as much. However, the gamestate still needs to communicate with the renderer, so it can show what is happening inside the game on screen. Therefore, this month's work was focused on building a generalized pipeline from the gamestate to the renderer. Its basic workflow looks like this for unit rendering:

The left side shows the state inside the engine, the right side the state inside the renderer. As you can see from the flowgraph, the gamestate never directly uses the renderer itself. Instead, it only sends information on what it wants to be drawn to the renderer via a connector object (the "render entity"). This object then converts the information from the gamestate into something the renderer can understand. For example, it may convert the position of a unit inside the game world into coordinates in the graphics scene of OpenGL.
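To make the connector idea a bit more concrete, here is a minimal sketch in Python (not the engine's actual code). The names `WorldPos`, `world_to_scene`, `update` and `fetch` are made up for illustration, and the coordinate conversion is just an assumed axis swap, not the mapping openage really uses.

```python
from dataclasses import dataclass
from threading import Lock


@dataclass(frozen=True)
class WorldPos:
    """Hypothetical gamestate position (north-east, south-east, up)."""
    ne: float
    se: float
    up: float = 0.0


def world_to_scene(pos):
    """Convert a game-world position into scene coordinates.

    Assumption: a plain axis swap onto a y-up scene space. The real
    conversion in the engine may look different.
    """
    return (pos.se, pos.up, pos.ne)


class RenderEntity:
    """Connector object: the gamestate writes, the renderer reads."""

    def __init__(self):
        self._lock = Lock()
        self._scene_pos = (0.0, 0.0, 0.0)
        self._changed = False

    def update(self, pos):
        """Called by the gamestate. It never touches the renderer itself."""
        with self._lock:
            self._scene_pos = world_to_scene(pos)
            self._changed = True

    def fetch(self):
        """Called by the renderer. Returns None if nothing has changed."""
        with self._lock:
            if not self._changed:
                return None
            self._changed = False
            return self._scene_pos
```

The point of the sketch is the one-way data flow: the gamestate only knows `update`, the renderer only knows `fetch`, and the conversion lives in between.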

The converted data from the render entities are then used for actual drawable objects (e.g. WorldRenderObject). These are mostly used to store the render state of the drawable, e.g. variables for the shader or texture handles for the animations that should be displayed.

Every frame, the drawable objects poll the render entities for updates and are then drawn by the renderer. Actually, there are several subrenderers which each represent a stage in the drawing process, e.g. terrain rendering, unit rendering, GUI rendering, etc. In the end, the outputs of each stage are blended together and create the result shown on screen.
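The per-frame polling could be sketched roughly like this. Only the name `WorldRenderObject` comes from the engine; `StubRenderEntity`, `fetch_updates`, `draw` and `render_frame` are hypothetical, and the "draw commands" are plain tuples standing in for real OpenGL calls.

```python
class StubRenderEntity:
    """Stand-in for a render entity: holds the latest update, if any."""

    def __init__(self):
        self._pending = None

    def push(self, data):
        """Gamestate side: store new data for the renderer."""
        self._pending = data

    def poll(self):
        """Renderer side: take the pending update (None if there is none)."""
        data, self._pending = self._pending, None
        return data


class WorldRenderObject:
    """Drawable that keeps render state between frames."""

    def __init__(self, entity):
        self.entity = entity
        self.state = {"position": (0.0, 0.0, 0.0), "texture": None}

    def fetch_updates(self):
        update = self.entity.poll()
        if update is not None:
            self.state.update(update)

    def draw(self, commands):
        commands.append(("draw", self.state["texture"], self.state["position"]))


def render_frame(drawables):
    """One frame: poll all render entities first, then draw everything."""
    commands = []
    for obj in drawables:
        obj.fetch_updates()
    for obj in drawables:
        obj.draw(commands)
    return commands
```

Because the drawable keeps its own state, a frame without any new update from the gamestate simply redraws the last known state.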

Here you can see how the stages are blended together behind the scenes.

- Skybox Stage: Draws the background of the scene in a single color (this would be black in AoE2).

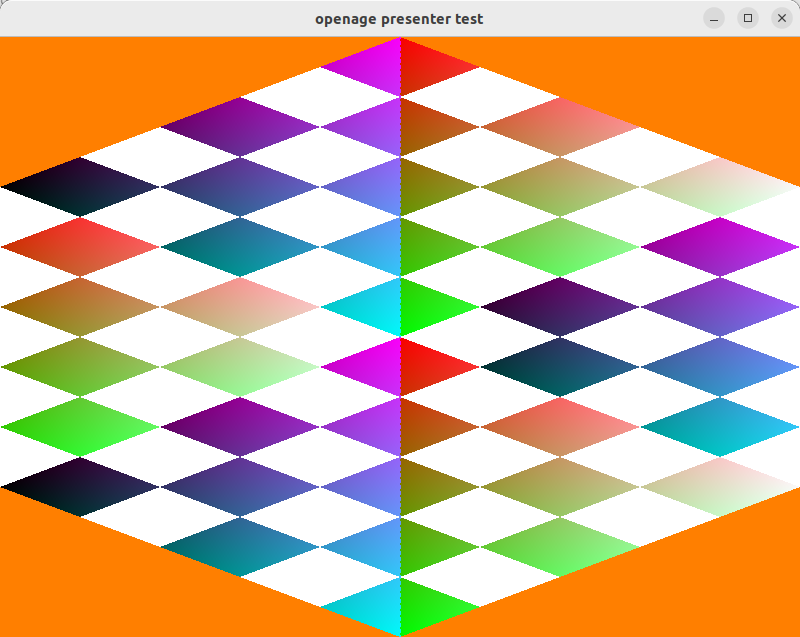

- Terrain Stage: Draws the terrain. The gamestate terrain is actually converted to a 3D mesh by the renderer which makes it much easier to texture.

- World Stage: Draws units, buildings and anything else that "physically" exists inside the game world. For now, it only draws dummy game objects that look like Gaben and the red X.

- GUI Stage: Draws the GUI from Qt QML definitions.
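Blending the stage outputs can be illustrated with a tiny CPU-side sketch. This is an assumption-heavy toy: the real renderer does this on the GPU, and the helper names (`blend_over`, `composite`, `solid`) as well as the use of standard "over" alpha blending are illustrative choices, not taken from the engine.

```python
def blend_over(dst, src):
    """Alpha-blend an RGBA source pixel over an opaque RGB destination pixel."""
    r, g, b, a = src
    return tuple(s * a + d * (1.0 - a) for s, d in zip((r, g, b), dst))


def composite(stage_outputs, width, height):
    """Blend stage outputs in order; the first stage is the bottom layer."""
    frame = [[(0.0, 0.0, 0.0)] * width for _ in range(height)]
    for layer in stage_outputs:
        for y in range(height):
            for x in range(width):
                frame[y][x] = blend_over(frame[y][x], layer[y][x])
    return frame


def solid(width, height, rgba):
    """Helper: a layer filled with a single RGBA color."""
    return [[rgba] * width for _ in range(height)]


W, H = 2, 1
skybox = solid(W, H, (0.0, 0.0, 0.0, 1.0))    # single opaque background color
terrain = solid(W, H, (0.0, 0.5, 0.0, 1.0))   # opaque terrain everywhere
world = [[(1.0, 0.0, 0.0, 1.0), (0.0, 0.0, 0.0, 0.0)]]  # one unit pixel, rest transparent
gui = solid(W, H, (0.0, 0.0, 0.0, 0.0))       # empty (fully transparent) GUI layer

frame = composite([skybox, terrain, world, gui], W, H)
```

In this toy frame, the left pixel ends up showing the unit and the right pixel shows the terrain underneath, since the world and GUI layers are transparent there.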

What's next?

With the rendering pipeline mostly done, we will probably start shifting to more work inside the gamestate. The first task here will be to get the simulation running by setting up the event loops and implementing time management. The renderer also needs rudimentary time management for correctly playing animations. Once that's done, we can play around with a dynamic gamestate that changes based on inputs from the player.

If there's enough time, we may also get around to refactoring the old coordinate system for the game world. This would also be required for reimplementing camera movement in the renderer.

Questions?

Any more questions? Let us know and discuss those ideas by visiting our subreddit /r/openage!

As always, you can also reach us directly in the dev chatroom:

- Matrix: #sfttech:matrix.org

openage dev updates
